This Teen Shared Her Troubles With a Robot. Could AI ‘Chatbots’ Solve the Youth Mental Health Crisis?

By Mark Keierleber | April 13, 2022

This story is part of a series produced in partnership with The Guardian exploring the increasing role of artificial intelligence and surveillance in our everyday lives during the pandemic, including in schools.

Fifteen-year-old Jordyne Lewis was stressed out.

The high school sophomore from Harrisburg, North Carolina, was overwhelmed with schoolwork, never mind the uncertainty of living in a pandemic that’s dragged on for two long years. Despite the challenges, she never turned to her school counselor or sought out a therapist.

Instead, she shared her feelings with a robot. Woebot, to be precise.

Lewis has struggled to cope with the changes and anxieties of pandemic life, and for this extroverted teenager, loneliness and social isolation were among the biggest hardships. But Lewis didn’t feel comfortable going to a therapist.

“It takes a lot for me to open up,” she said. But did Woebot do the trick?

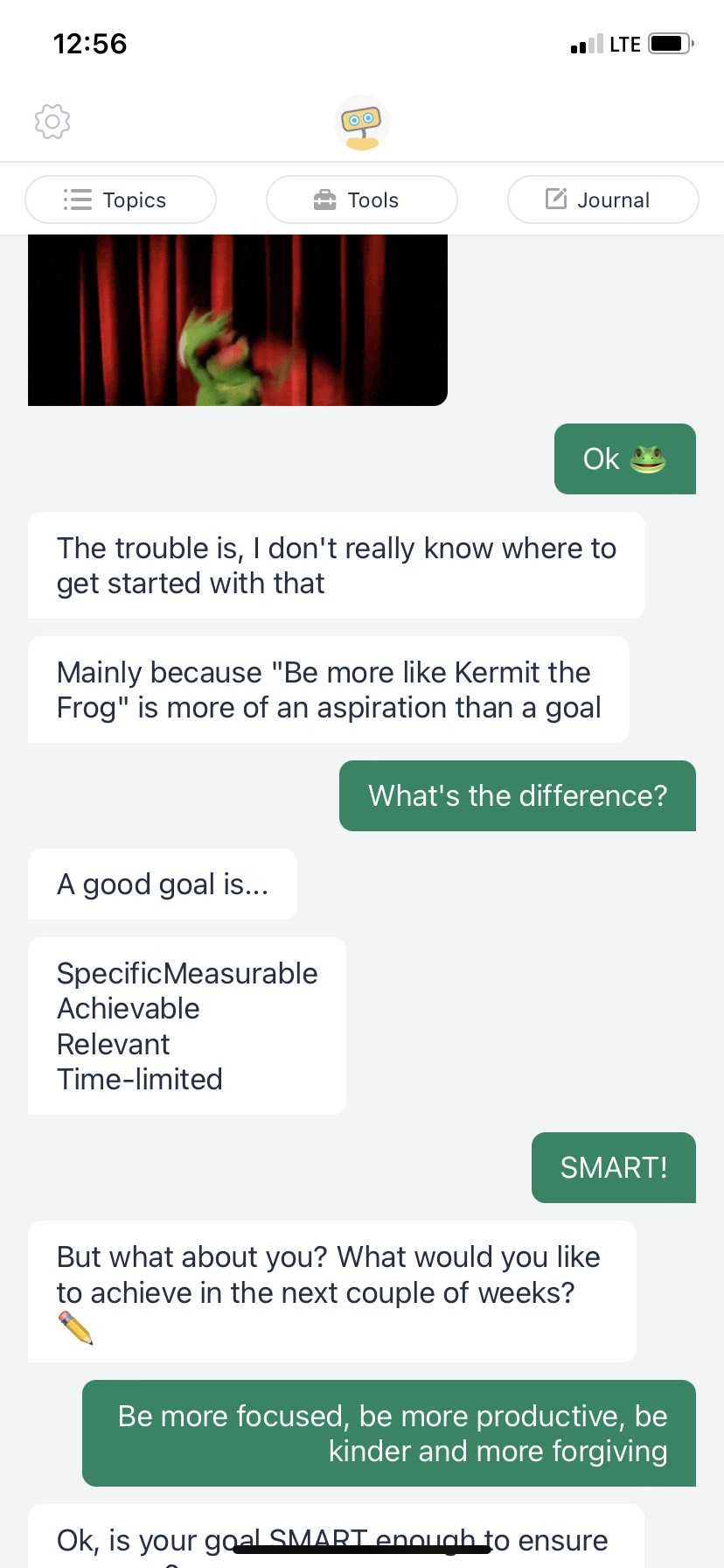

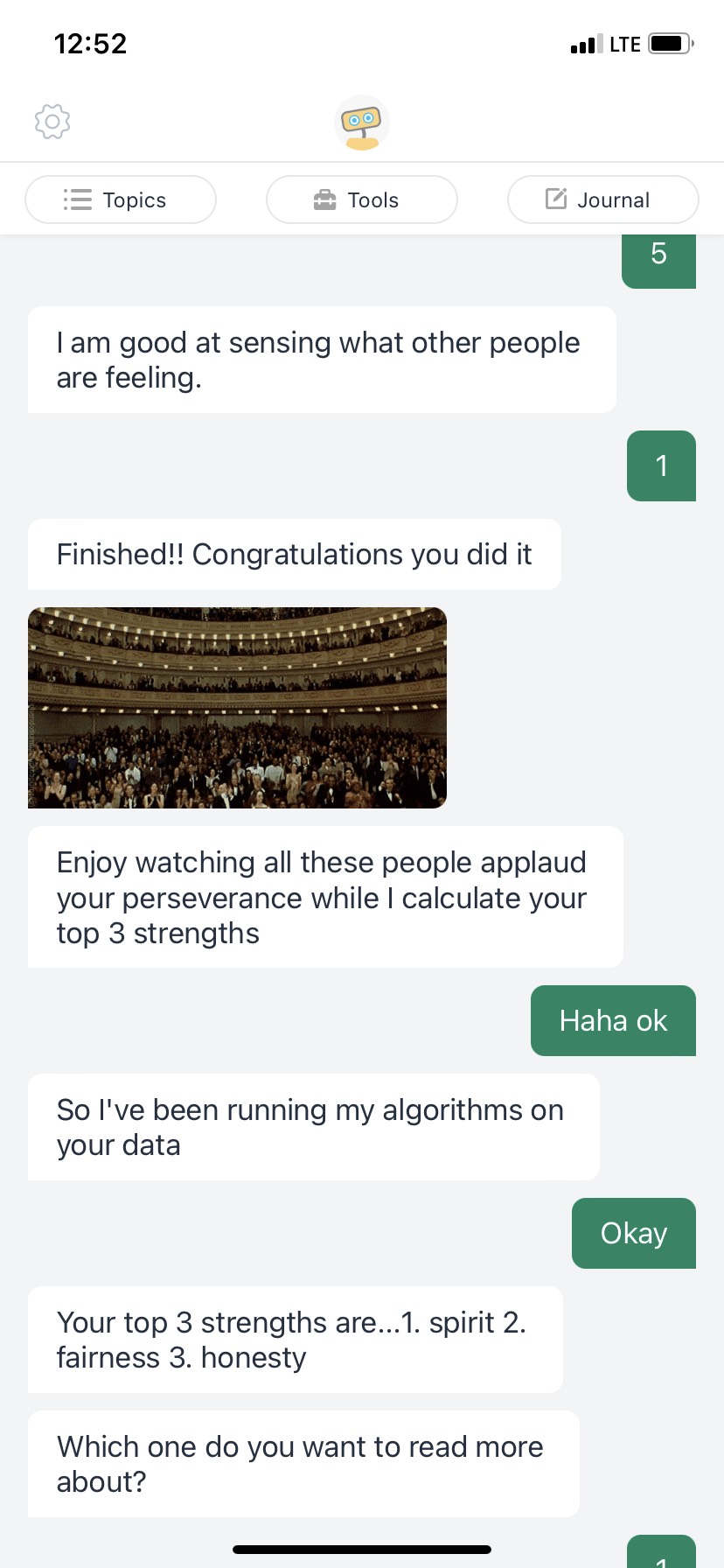

Chatbots employ artificial intelligence similar to that behind Alexa or Siri to engage in text-based conversations. Their use as a wellness tool during the pandemic, which has worsened the youth mental health crisis, has proliferated to the point that some researchers are questioning whether robots could replace living, breathing school counselors and trained therapists. That’s a worry for critics, who say the chatbots are a Band-Aid solution to psychological suffering with a limited body of evidence to support their efficacy.

“Six years ago, this whole space wasn’t as fashionable, it was viewed as almost kooky to be doing stuff in this space,” said John Torous, the director of the digital psychiatry division at Beth Israel Deaconess Medical Center in Boston. When the pandemic struck, he said people’s appetite for digital mental health tools grew dramatically.

Throughout the crisis, experts have been sounding the alarm about a surge in depression and anxiety. During his State of the Union address in March, President Joe Biden called youth mental health challenges an emergency, noting that students’ “lives and education have been turned upside-down.”

Digital wellness tools like mental health chatbots have stepped in with a promise to fill the gaps in America’s overburdened and under-resourced mental health care system. As many as two-thirds of U.S. children experience trauma, yet many communities lack mental health providers who specialize in treating them. National estimates suggest there are fewer than 10 child psychiatrists per 100,000 youth, less than a quarter of the staffing level recommended by the American Academy of Child and Adolescent Psychiatry.

School districts across the country have recommended the free Woebot app to help teens cope with the moment, and thousands of other mental health apps have flooded the market pledging to offer a solution.

“The pandemic hit and this technology basically skyrocketed. Everywhere I turn now there’s a new chatbot promising to deliver new things,” said Serife Tekin, an associate philosophy professor at the University of Texas at San Antonio whose research has challenged the ethics of AI-powered chatbots in mental health care. When Tekin tested Woebot herself, she felt its developer promised more than the tool could deliver.

Body language and tone are important to traditional therapy, Tekin said, but Woebot doesn’t recognize such nonverbal communication.

“It’s not at all like how psychotherapy works,” Tekin said.

Sidestepping stigma

Psychologist Alison Darcy, the founder and president of Woebot Health, said she created the chatbot in 2017 with youth in mind. Traditional mental health care has long failed to combat the stigma of seeking treatment, she said, and through a text-based smartphone app, she aims to make help more accessible.

“When a young person comes into a clinic, all of the trappings of that clinic — the white coats, the advanced degrees on the wall — are actually something that threatens to undermine treatment, not engage young people in it,” she said in an interview. Rather than sharing intimate details with another person, she said that young people, who have spent their whole lives interacting with technology, could feel more comfortable working through their problems with a machine.

Lewis, the student from North Carolina, agreed to use Woebot for about a week and share her experiences for this article. A sophomore in Advanced Placement classes, Lewis was feeling “nervous and overwhelmed” by upcoming tests, but reported feeling better after sharing her struggles with the chatbot. Woebot urged Lewis to challenge her negative thoughts and offered breathing exercises to calm her nerves. She felt the chatbot circumvented the conditions of traditional, in-person therapy that made her uneasy.

“It’s a robot,” she said. “It’s objective. It can’t judge me.”

Critics, however, have offered reasons to be cautious, pointing to glitches, questionable data collection and privacy practices, and flaws in the existing research on the chatbots’ effectiveness.

Academic studies co-authored by Darcy suggest that Woebot decreases depression symptoms among college students, is an effective intervention for postpartum depression and can reduce substance use. Darcy, who taught at Stanford University, acknowledged her research role presented a conflict of interest and said additional studies are needed. After all, she has big plans for the chatbot’s future.

The company is currently seeking approval from the U.S. Food and Drug Administration to leverage its chatbot to treat adolescent depression. Darcy described the free Woebot app as a “lightweight wellness tool.” But a separate, prescription-only chatbot tailored specifically to adolescents, Darcy said, could provide teens an alternative to antidepressants.

Not all practitioners are against automating therapy. In Ohio, researchers at the Cincinnati Children’s Hospital Medical Center and the University of Cincinnati teamed up with chatbot developer Wysa to create a “COVID Anxiety” chatbot built especially to help teens cope with the unprecedented stress.

Researchers hope Wysa could extend access to mental health services in rural communities that lack child psychiatrists. Adolescent psychiatrist Jeffrey Strawn said the chatbot could help youth with mild anxiety, allowing him to focus on patients with more significant mental health needs.

He said it would have been impossible for the mental health care system to help every student with anxiety even prior to COVID. “During the pandemic, it would have been super untenable.”

A Band-Aid?

Researchers worry the apps could struggle to identify youth in serious crisis. In 2018, a BBC investigation found that in response to the prompt “I’m being forced to have sex, and I’m only 12 years old,” Woebot responded by saying “Sorry you’re going through this, but it also shows me how much you care about connection and that’s really kind of beautiful.”

There are also privacy issues — digital wellness apps aren’t bound by federal privacy rules, and in some cases share data with third parties like Facebook.

Darcy, the Woebot founder, said her company follows “hospital-grade” security protocols with its data and that, while natural language processing is “never 100 percent perfect,” the company has made major updates to the algorithm in recent years. Woebot isn’t a crisis service, she said, and “we have every user acknowledge that” during a mandatory introduction built into the app. Still, she said the service is critical in solving access woes.

“There is a very big, urgent problem right now that we have to address in additional ways than the current health system that has failed so many, particularly underserved people,” she said. “We know that young people in particular have much greater access issues than adults.”

Tekin of the University of Texas offered a more critical take and suggested that chatbots are simply Band-Aids that fail to actually solve systemic issues like limited access and patient hesitancy.

“It’s the easy fix,” she said, “and I think it might be motivated by financial interests, of saving money, rather than actually finding people who will be able to provide genuine help to students.”

Lowering the barrier

Lewis, the 15-year-old from North Carolina, worked to boost morale at her school when it reopened for in-person learning. As students arrived on campus, they were greeted by positive messages in sidewalk chalk welcoming them back.

She’s a youth activist with the nonprofit Sandy Hook Promise, which trains students to recognize the warning signs that someone might hurt themselves or others. The group, which operates an anonymous tip line in schools nationwide, has observed a 12 percent increase in reports related to student suicide and self-harm during the pandemic compared to 2019.

Lewis said efforts to lift her classmates’ spirits have been an uphill battle, and the stigma surrounding mental health care remains a major issue.

“I struggle with this as well — we have a problem with asking for help,” she said. “Some people feel like it makes them feel weak or they’re hopeless.”

Lewis said Woebot lowered the barrier to help, and she plans to keep using it. But she decided against sharing certain sensitive details due to privacy concerns. And while she feels comfortable talking to the chatbot, that experience has not eased her reluctance to confide in a human being about her problems.

“It’s like the stepping stone to getting help,” she said. “But it’s definitely not a permanent solution.”

Disclosure: This story was produced in partnership with The Guardian. It is part of a reporting series that is supported by the Open Society Foundations, which works to build vibrant and inclusive democracies whose governments are accountable to their citizens. All content is editorially independent and overseen by Guardian and 74 editors.

Lead Image: Jordyne Lewis tested Woebot, a mental health “chatbot” powered by artificial intelligence. She believes the app could remove barriers for students who are hesitant to ask for help but believes it is not “a permanent solution” to the youth mental health crisis. (Andy McMillan / The Guardian)
